Part 2: Implementing Effective Repositories in Go & Confronting the Critiques

(Recap: In Part 1, we explored the challenges of data access in Go, defined the Repository Pattern, and compared it to raw SQL and ORMs, setting the stage for a more structured approach. If you missed it, you can catch up here.)

Table of Contents (Part 2)

- The 3 AM Database Panic
- Code Deep Dive: Building a Manual Go Repository
  - Defining the Domain Model
  - Crafting the Repository Interface
  - The pgx Implementation: Step-by-Step
  - Observations on the Manual Approach
- Why This Changes Everything: The Concrete Advantages
  - Advantage 1: Enhanced Separation of Concerns (SoC)
  - Advantage 2: Dramatically Improved Testability
  - Advantage 3: Increased Flexibility & Maintainability
  - Advantage 4: Centralized Data Access Logic
- The Honest Truth: Acknowledging the Downsides
  - Disadvantage 1: Boilerplate Code
  - Disadvantage 2: Potential for Over-Abstraction
- Confronting the Critics: A Go Developer’s Response
  - Critique 1: Hiding Native ORM Features
  - Critique 2: Deep Abstractions Kill Flexibility
  - Critique 3: SOLID Violations
  - Critique 4: Unit Testing Isn’t That Much Easier
  - Critique 5: Leaky Abstraction
  - Critique 6: CQRS Doesn’t Fit Well
  - Critique 7: The “Swappability” Stories
  - Critique 8: Performance Anti-Patterns
  - The Verdict: Context Matters
  - The Path Forward: Setting Up for Success
  - What’s Next: The sqlc Revolution

The 3 AM Database Panic

It’s 3 AM. Your phone buzzes with that dreaded sound. Production is down.

“The payment service is throwing database errors,” your teammate types frantically in Slack. “I can’t figure out where the bug is; there are SQL queries scattered across twelve different files.”

You’ve been here before. What started as a simple microservice has become a web of embedded SQL, making debugging feel like archaeological excavation. Each fix breaks something else. Each test requires spinning up a full database. Each new feature means copying and pasting similar queries across multiple handlers.

This is the moment when clean architecture stops being academic and starts being survival.

In Part 1, we explored the theoretical foundations of the Repository Pattern through our story of three teams tackling the same problem with different approaches. Now it’s time to get our hands dirty. We’re going to build a repository from scratch, understand exactly why it solves the 3 AM panic scenario, and address the skeptics who argue it’s unnecessary complexity.

By the end of this article, you’ll no longer wonder whether repositories are worth the effort; you’ll wonder how you survived without them.

Code Deep Dive: Building a Manual Go Repository

To truly appreciate what tools like sqlc (which we’ll cover in Part 3) bring to the table, it’s essential to first understand how to build a repository manually. This exercise highlights both the structural benefits of the pattern and the boilerplate that sqlc aims to eliminate. We’ll use pgx/v5 with a PostgreSQL database.

Let’s build this step by step, just like you would in a real project.

Defining the Domain Model

First, let’s define our application’s representation of a user. This struct lives in your domain layer and represents your business entity, not your database table.

```go
// models/user.go
package models

import "time"

// User represents a user in our application domain.
type User struct {
	ID        int64     `json:"id"`
	Name      string    `json:"name"`
	Email     string    `json:"email" binding:"required,email"` // Example with validation tags
	CreatedAt time.Time `json:"created_at"`
	UpdatedAt time.Time `json:"updated_at"`
}
```

💡 Pro Tip: Keep your domain models focused on business logic, not database concerns. The `json` tags are for API serialization, and `binding` tags can be used with validation libraries like Gin’s validator.

Crafting the Repository Interface

Next, we define the contract for our user data operations. This interface is what your service layer will depend on—and what makes testing a breeze.

```go
// domain/repositories/user_repository.go
package repositories

import (
	"context"
	"errors"

	"yourapp/models" // Adjust path to your models package
)

// Domain-specific errors. They live next to the interface so services (and
// their tests) can match them with errors.Is without importing the database layer.
var (
	ErrUserNotFound    = errors.New("user not found")
	ErrUserEmailExists = errors.New("user with this email already exists")
)

// UserRepository defines the interface for user data operations.
// This is the contract that our service layer depends on.
type UserRepository interface {
	Create(ctx context.Context, user *models.User) error
	GetByEmail(ctx context.Context, email string) (*models.User, error)
	GetByID(ctx context.Context, id int64) (*models.User, error)

	// Future methods could include:
	// Update(ctx context.Context, user *models.User) error
	// Delete(ctx context.Context, id int64) error
	// ListActiveUsers(ctx context.Context, limit int) ([]models.User, error)
}
```

Key Design Decisions:

- `context.Context`: Standard Go practice for cancellation, deadlines, and request-scoped values.
- `*models.User` for `Create`: Allows the repository to set database-generated fields like `ID`, `CreatedAt`, and `UpdatedAt` back onto the user object.
- `(*models.User, error)` for getters: If a user isn’t found, we return `nil` for the user and the domain-specific `ErrUserNotFound`.
- Domain errors next to the interface: `ErrUserNotFound` and `ErrUserEmailExists` belong to the domain. Services can therefore check them with `errors.Is` without depending on the PostgreSQL package.

The pgx Implementation: Step-by-Step

Now comes the real work: implementing `UserRepository` using pgx to interact with PostgreSQL. This code would typically reside in your infrastructure/persistence layer.

```go
// infrastructure/persistence/postgres/pgx_user_repository.go
package postgres

import (
	"context"
	"errors"
	"fmt"
	"log" // In a real app, use a structured logger like slog or zerolog

	"github.com/jackc/pgx/v5"
	"github.com/jackc/pgx/v5/pgconn"
	"github.com/jackc/pgx/v5/pgxpool"

	"yourapp/domain/repositories" // Adjust to your interface path
	"yourapp/models"              // Adjust to your models path
)

// pgxUserRepository implements the UserRepository interface using pgx.
type pgxUserRepository struct {
	dbpool *pgxpool.Pool
}

// NewPgxUserRepository creates a new instance of pgxUserRepository.
func NewPgxUserRepository(dbpool *pgxpool.Pool) repositories.UserRepository {
	return &pgxUserRepository{dbpool: dbpool}
}

// Create inserts a new user into the database.
func (r *pgxUserRepository) Create(ctx context.Context, user *models.User) error {
	query := `
		INSERT INTO users (name, email)
		VALUES ($1, $2)
		RETURNING id, created_at, updated_at`

	// Scan writes the returned id, created_at, updated_at back into the user pointer.
	err := r.dbpool.QueryRow(ctx, query, user.Name, user.Email).Scan(
		&user.ID,
		&user.CreatedAt,
		&user.UpdatedAt,
	)
	if err != nil {
		var pgErr *pgconn.PgError
		// PostgreSQL error code for unique_violation (e.g., duplicate email)
		// See: https://www.postgresql.org/docs/current/errcodes-appendix.html
		if errors.As(err, &pgErr) && pgErr.Code == "23505" {
			return fmt.Errorf("%w: %s", repositories.ErrUserEmailExists, user.Email)
		}
		log.Printf("PgxUserRepository.Create: failed to insert user %s: %v", user.Email, err)
		return fmt.Errorf("failed to create user: %w", err)
	}
	return nil
}

// GetByEmail retrieves a user by their email address.
func (r *pgxUserRepository) GetByEmail(ctx context.Context, email string) (*models.User, error) {
	query := `
		SELECT id, name, email, created_at, updated_at
		FROM users
		WHERE email = $1`

	var user models.User
	err := r.dbpool.QueryRow(ctx, query, email).Scan(
		&user.ID,
		&user.Name,
		&user.Email,
		&user.CreatedAt,
		&user.UpdatedAt,
	)
	if err != nil {
		if errors.Is(err, pgx.ErrNoRows) {
			return nil, repositories.ErrUserNotFound // Return our domain-specific error
		}
		log.Printf("PgxUserRepository.GetByEmail: failed to get user %s: %v", email, err)
		return nil, fmt.Errorf("failed to get user by email: %w", err)
	}
	return &user, nil
}

// GetByID retrieves a user by their unique ID.
func (r *pgxUserRepository) GetByID(ctx context.Context, id int64) (*models.User, error) {
	query := `
		SELECT id, name, email, created_at, updated_at
		FROM users
		WHERE id = $1`

	var user models.User
	err := r.dbpool.QueryRow(ctx, query, id).Scan(
		&user.ID,
		&user.Name,
		&user.Email,
		&user.CreatedAt,
		&user.UpdatedAt,
	)
	if err != nil {
		if errors.Is(err, pgx.ErrNoRows) {
			return nil, repositories.ErrUserNotFound // Domain-specific error
		}
		log.Printf("PgxUserRepository.GetByID: failed to get user with id %d: %v", id, err)
		return nil, fmt.Errorf("failed to get user by id: %w", err)
	}
	return &user, nil
}

// Compile-time check to ensure pgxUserRepository satisfies UserRepository.
var _ repositories.UserRepository = (*pgxUserRepository)(nil)
```

Observations on the Manual Approach

Look at what we just built. Even for these simple CRUD operations, several patterns emerge:

- SQL Strings in Go Code: Queries are embedded as strings. This is prone to typos and syntax errors that are only caught at runtime.
- Manual `Scan()` Calls: The order and number of arguments in `Scan()` must exactly match the columns in the `SELECT` statement. Any discrepancy leads to runtime errors or subtle data corruption.
- Error Handling Dance: We must explicitly check for `pgx.ErrNoRows` and map it to our domain-specific `ErrUserNotFound`. Handling other database errors (like unique constraint violations) also requires specific PostgreSQL knowledge.
- Repetitive Boilerplate: Even for these simple operations, there’s noticeable repetitive code for query execution, scanning, and error mapping.

This manual implementation gives us the architectural benefits of the Repository Pattern, but the implementation details are verbose and error-prone. This is exactly why sqlc will feel like magic in Part 3.

Why This Changes Everything: The Concrete Advantages

“But wait,” you might say, “that’s a lot of code for simple database operations. Why not just put the SQL directly in my handlers?”

Let me show you why this seemingly extra work transforms how you build and maintain Go applications.

Advantage 1: Enhanced Separation of Concerns (SoC)

Remember our 3 AM debugging scenario? Here’s the architecture that prevents it:

```text
┌─────────────────┐    ┌─────────────────┐    ┌─────────────────┐
│  HTTP Handler   │    │  Service Layer  │    │   Repository    │
│                 │───▶│                 │───▶│    Interface    │
│ • Parse Request │    │ • Business Logic│    │ • GetByID()     │
│ • Validate      │    │ • Orchestration │    │ • Create()      │
│ • Serialize     │    │ • Error Handling│    │ • GetByEmail()  │
└─────────────────┘    └─────────────────┘    └─────────────────┘
                                                       │
                                                       ▼
                                              ┌─────────────────┐
                                              │ PostgreSQL Impl │
                                              │                 │
                                              │ • SQL Queries   │
                                              │ • Error Mapping │
                                              │ • Type Scanning │
                                              └─────────────────┘
```

| Aspect of SoC | Benefit | 3 AM Impact |
|---|---|---|
| Business Logic Isolation | Service layers focus purely on business rules, unaware of persistence details | Bug in user creation? Check the service. SQL error? Check the repository. |
| Data Access Encapsulation | All database interaction logic is contained within the repository | One place to look for all user-related SQL queries |
| Independent Evolution | Changes to database schema primarily affect the repository, not the entire service layer | Schema change affects 1 file, not 12 scattered handlers |
| Clearer Team Responsibilities | Different developers can focus on business logic vs. data access optimization | Junior dev works on business logic, senior dev optimizes queries |

Advantage 2: Dramatically Improved Testability

This is where repositories truly shine. Because services depend on repository interfaces, unit testing becomes clean and focused.

The Magic: Interface-Based Testing

```go
// services/user_service_test.go
package services_test

import (
	"context"
	"errors"
	"testing"
	"time"

	"github.com/stretchr/testify/assert"
	"github.com/stretchr/testify/mock"

	"yourapp/domain/repositories"
	"yourapp/models"
	"yourapp/services" // provides NewUserService and RegisterNewUser
)

// MockUserRepository - our test double
type MockUserRepository struct {
	mock.Mock
}

func (m *MockUserRepository) Create(ctx context.Context, user *models.User) error {
	args := m.Called(ctx, user)
	// Simulate the database setting generated fields on success
	if args.Error(0) == nil {
		user.ID = 123
		user.CreatedAt = time.Now()
		user.UpdatedAt = time.Now()
	}
	return args.Error(0)
}

func (m *MockUserRepository) GetByEmail(ctx context.Context, email string) (*models.User, error) {
	args := m.Called(ctx, email)
	if user, ok := args.Get(0).(*models.User); ok {
		return user, args.Error(1)
	}
	return nil, args.Error(1)
}

func (m *MockUserRepository) GetByID(ctx context.Context, id int64) (*models.User, error) {
	args := m.Called(ctx, id)
	if user, ok := args.Get(0).(*models.User); ok {
		return user, args.Error(1)
	}
	return nil, args.Error(1)
}

// Compile-time check that the mock satisfies the interface.
var _ repositories.UserRepository = (*MockUserRepository)(nil)

// Test the happy path
func TestUserService_Register_Success(t *testing.T) {
	mockRepo := new(MockUserRepository)
	userService := services.NewUserService(mockRepo) // Inject our mock

	// Configure the mock: when Create is called with the expected user, return success.
	// mock.Anything matches any context. Matching on a concrete context type such as
	// "*context.emptyCtx" breaks on Go 1.21+, where context.Background() returns a different type.
	mockRepo.On("Create", mock.Anything, mock.MatchedBy(func(u *models.User) bool {
		return u.Name == "Test User" && u.Email == "test@example.com"
	})).Return(nil)

	// Execute the service method
	createdUser, err := userService.RegisterNewUser(context.Background(), "Test User", "test@example.com")

	// Verify results
	assert.NoError(t, err)
	assert.Equal(t, int64(123), createdUser.ID) // ID set by mock
	mockRepo.AssertExpectations(t)              // Verify Create was called
}

// Test the error path
func TestUserService_Register_EmailExists(t *testing.T) {
	mockRepo := new(MockUserRepository)
	userService := services.NewUserService(mockRepo)

	// Configure mock to return our domain error
	mockRepo.On("Create", mock.Anything, mock.AnythingOfType("*models.User")).
		Return(repositories.ErrUserEmailExists)

	// Execute the service method
	_, err := userService.RegisterNewUser(context.Background(), "Test User", "existing@example.com")

	// Verify error handling
	assert.Error(t, err)
	assert.True(t, errors.Is(err, repositories.ErrUserEmailExists))
	mockRepo.AssertExpectations(t)
}
```

The Result: Tests that run in milliseconds, require no database setup, and test your business logic in isolation. When you’re debugging at 3 AM, these fast, reliable tests become your best friend.

Advantage 3: Increased Flexibility & Maintainability

The interface-based approach opens up powerful possibilities:

Swap Implementations with Zero Service Changes:

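```go
// env, dbpool and sqliteDB come from your startup/config code.
// The memory and sqlite packages are illustrative.
var userRepo repositories.UserRepository

switch env {
case "production":
	userRepo = postgres.NewPgxUserRepository(dbpool) // PostgreSQL
case "test":
	userRepo = memory.NewInMemoryUserRepository() // In-memory
default:
	userRepo = sqlite.NewSQLiteUserRepository(sqliteDB) // SQLite for fast local setup
}

// The service doesn't care which one you use
userService := services.NewUserService(userRepo)
```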

Add Caching with the Decorator Pattern:

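```go
// cachingUserRepository decorates another UserRepository.
// cache is a go-redis client (e.g. github.com/redis/go-redis/v9).
type cachingUserRepository struct {
	next  repositories.UserRepository
	cache *redis.Client
}

func (r *cachingUserRepository) GetByID(ctx context.Context, id int64) (*models.User, error) {
	// Check cache first (getCachedUser is a helper not shown here)
	if cached := r.getCachedUser(ctx, id); cached != nil {
		return cached, nil
	}

	// Fall back to database
	user, err := r.next.GetByID(ctx, id)
	if err == nil {
		r.cacheUser(ctx, user) // helper not shown here
	}
	return user, err
}

// The remaining methods delegate to next, so the decorator still
// satisfies repositories.UserRepository.
func (r *cachingUserRepository) Create(ctx context.Context, user *models.User) error {
	return r.next.Create(ctx, user)
}

func (r *cachingUserRepository) GetByEmail(ctx context.Context, email string) (*models.User, error) {
	return r.next.GetByEmail(ctx, email)
}
```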

Advantage 4: Centralized Data Access Logic

All SQL queries and data mapping logic for User entities live in one place. When you need to:

- Update a query for performance

- Handle a schema change

- Add logging or metrics

- Debug a data issue

You know exactly where to look. No more grep-ing through dozens of files hunting for that one SQL query.

The Honest Truth: Acknowledging the Downsides

I won’t sugarcoat this: the manual repository implementation we just built isn’t without its drawbacks. Let’s be honest about the costs:

Disadvantage 1: Boilerplate Code

Look at our pgxUserRepository again. Even for simple CRUD operations, there’s significant repetitive code:

- Writing SQL query strings (error-prone)
- Calling `dbpool.QueryRow()` or `dbpool.Query()`
- Manually scanning results with `row.Scan()` (order-dependent, fragile)
- Mapping between database rows and Go structs
- Handling `pgx.ErrNoRows` consistently

This boilerplate is verbose and a common source of subtle bugs. Miss one field in a `Scan()` call? You get an error at runtime instead of at compile time. Swap the order of two columns of the same type, such as `name` and `email`? Silent data corruption.

Disadvantage 2: Potential for Over-Abstraction

If you try to create overly generic repositories (like Save(entity interface{}) error), you lose type safety and create interfaces that are hard to use correctly.

The Go Way: Go favors specific, purpose-built interfaces over catch-all ones. Tailored interfaces like `UserRepository` with explicit methods like `GetActiveUsersByRegion()` are preferred. This aligns well with how sqlc will generate code in Part 3.

These disadvantages, especially the boilerplate, are precisely what sqlc will help us solve. But first, let’s address the critics.

Confronting the Critics: A Go Developer’s Response

The Repository Pattern has vocal critics. In particular:

- Mesut Ataşoy’s Medium article “The Dark Side of Repository Pattern: A Developer’s Honest Journey”
  🔗 https://medium.com/@mesutatasoy/the-dark-side-of-repository-pattern-a-developers-honest-journey-eb51eba7e8d8
- Anthony Alaribe’s X post “A challenge I have with the repository pattern…”
  🔗 https://x.com/tonialaribe/status/1755636332141908207

Let’s address their concerns head-on with a Go-specific perspective.

Critique 1: Hiding Native ORM Features

The Claim:

“It hides native ORM features like change tracking, eager/lazy loading, and raw-SQL execution, forcing you to reinvent them poorly.”

— Mesut Ataşoy, The Dark Side of Repository Pattern

Go Reality: In our pgx implementation, we’re not hiding an ORM—we’re using a database driver directly. If PostgreSQL offers a feature accessible via pgx (like CopyFrom for bulk inserts), our repository can expose it through its interface.

```go
// Nothing stops us from exposing PostgreSQL-specific features
type UserRepository interface {
	Create(ctx context.Context, user *models.User) error
	BulkCreate(ctx context.Context, users []models.User) error // Backed by the pool's CopyFrom (PostgreSQL COPY)
	GetByID(ctx context.Context, id int64) (*models.User, error)
}
```

With sqlc (Part 3), this becomes even less of an issue: you write the raw SQL yourself, so nothing is hidden.
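As a sketch of how `BulkCreate` could sit on top of PostgreSQL’s COPY, the helper below builds the `[][]any` rows that pgx v5’s `pgx.CopyFromRows` takes. The pgx call itself appears only in a comment so the file has no dependencies. `userCopyRows` and `userCopyColumns` are hypothetical names. One behavioral difference is worth knowing: COPY has no `RETURNING` clause, so unlike `Create`, this path cannot write generated IDs back onto the users.

```go
package main

// User is trimmed to the fields BulkCreate writes.
type User struct {
	Name  string
	Email string
}

// userCopyColumns lists the target columns; its order must match userCopyRows.
var userCopyColumns = []string{"name", "email"}

// userCopyRows converts domain users into the row shape COPY expects.
// In a pgx v5 repository the call could look like:
//
//	_, err := r.dbpool.CopyFrom(ctx, pgx.Identifier{"users"}, userCopyColumns,
//		pgx.CopyFromRows(userCopyRows(users)))
func userCopyRows(users []User) [][]any {
	rows := make([][]any, 0, len(users))
	for _, u := range users {
		rows = append(rows, []any{u.Name, u.Email})
	}
	return rows
}
```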

Critique 2: Deep Abstractions Kill Flexibility

The Claim:

“Repositories often add unnecessary layers of abstraction, forcing you to re-implement filtering, eager-loading, and transaction management in your own code.”

— Mesut Ataşoy

Go Perspective: Go’s philosophy discourages overly generic repositories. Instead of GetAll(filters interface{}), we build specific methods:

```go
type UserRepository interface {
	GetActiveUsersByRegion(ctx context.Context, region string) ([]models.User, error)
	GetUsersRegisteredAfter(ctx context.Context, date time.Time) ([]models.User, error)
	GetUserWithOrderHistory(ctx context.Context, id int64) (*models.UserWithOrders, error)
}
```

Each method is backed by a specific, optimized SQL query. Transaction management happens at the service layer, with repositories participating explicitly.

Critique 3: SOLID Violations

The Claim:

“Many implementations violate SRP by mixing data access, mapping and querying logic in one class.”

— Mesut Ataşoy

Go Analysis:

- SRP: Our `pgxUserRepository` has one responsibility: user data access via pgx. The mapping (scanning database rows to structs) is part of this data access concern.
- Open/Closed & Interface Segregation: Go’s focused interfaces help here. Add new queries by extending the interface, not modifying existing methods.
- Dependency Inversion: Services depend on `UserRepository` (the abstraction), not `pgxUserRepository` (the concrete implementation). This is proper DIP.

Critique 4: Unit Testing Isn’t That Much Easier

The Claim:

“You still have to mock complex repository behavior—better off spinning up an in-memory DB.”

— Mesut Ataşoy

Go Reality: Our testing example shows Go’s interface-based mocking is straightforward and powerful for service-layer unit tests. Integration tests with real databases are complementary—they test different concerns:

- Unit tests (with mocks): Test business logic, error handling, edge cases

- Integration tests (with real DB): Test SQL correctness, schema compatibility, performance

Both are valuable. Repositories make focused unit tests of business logic practical, which is hard to achieve when SQL is embedded directly in handlers.

Critique 5: Leaky Abstraction

The Claim:

“You still end up handling transactions, DB-specific errors, performance tweaks—all through that repository layer.”

— Mesut Ataşoy

Go Perspective: With explicit pgx usage, database-specific features are conscious choices, not hidden surprises. We explicitly handle PostgreSQL error codes because we want that level of control.

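```go
// We choose what to expose vs. what to abstract (trimmed version of Create)
func (r *pgxUserRepository) Create(ctx context.Context, user *models.User) error {
	query := `INSERT INTO users (name, email) VALUES ($1, $2) RETURNING id, created_at, updated_at`
	err := r.dbpool.QueryRow(ctx, query, user.Name, user.Email).
		Scan(&user.ID, &user.CreatedAt, &user.UpdatedAt)
	if err == nil {
		return nil
	}

	// We handle PG-specific error codes explicitly
	var pgErr *pgconn.PgError
	if errors.As(err, &pgErr) && pgErr.Code == "23505" {
		return repositories.ErrUserEmailExists // Abstract to domain error
	}

	// Other errors bubble up with context
	return fmt.Errorf("failed to create user: %w", err)
}
```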

Critique 6: CQRS Doesn’t Fit Well

The Claim:

“The repository pattern forces you to use the same interface for both reads and writes, making CQRS harder to implement cleanly.”

— Mesut Ataşoy

Go Solution: Define separate interfaces when implementing CQRS:

```go
type UserCommandRepository interface {
	Create(ctx context.Context, user *models.User) error
	Update(ctx context.Context, user *models.User) error
	Delete(ctx context.Context, id int64) error
}

type UserQueryRepository interface {
	GetByID(ctx context.Context, id int64) (*models.User, error)
	GetByEmail(ctx context.Context, email string) (*models.User, error)
	SearchUsers(ctx context.Context, criteria SearchCriteria) ([]models.User, error)
}
```

The pattern doesn’t prevent this separation—it enables it.

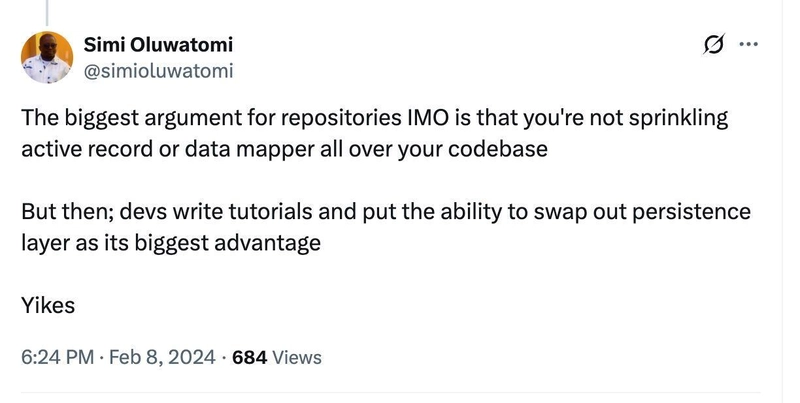

Critique 7: The “Swappability” Stories

The Claim:

“The biggest argument for repositories, IMO, is that you’re not sprinkling Active Record/Data Mapper all over your codebase

But then devs write tutorials and put ‘swappable persistence’ as the main advantage. Yikes.”

— @simioluwatomi

This criticism is valid, but it misses the real benefits. The primary wins aren’t about swapping PostgreSQL for MongoDB (which would require fundamental architecture changes anyway). The real benefits are:

Potential Daily Development Wins:

- Testing: SQLite for fast tests, PostgreSQL for integration tests

- Development: In-memory repositories for rapid prototyping

- Environment Adaptation: Different connection pooling, caching strategies, or even managed database services

The Core Win: As the critic notes, the biggest advantage is not sprinkling data access logic throughout your business services. Centralized data logic is the real benefit, not hypothetical database swapping.

Critique 8: Performance Anti-Patterns

The Claim:

“You tend to make too many DB calls or fetch more data than needed because engineers reuse overly generic getters instead of writing use-case-specific queries.”

— Anthony Alaribe

“At scale, moving unneeded data over the network costs you latency and bandwidth. Making many separate DB calls which could have been a single query is expensive in terms of latency.”

This is a critical point, but it describes an implementation anti-pattern, not a flaw in the pattern itself:

❌ Anti-Pattern (Don’t Do This):

```go
type UserRepository interface {
	GetUser(id int64, includes ...string) (*User, error) // Too generic!
}

// Results in inefficient reuse
user, err := userRepo.GetUser(123, "orders", "preferences", "activity_log") // Over-fetching
if err != nil {
	return err
}
name := user.Name // Only needed the name!
```

✅ Best Practice (Do This):

```go
type UserRepository interface {
	GetUserProfile(ctx context.Context, id int64) (*UserProfile, error)
	GetUserNameForDisplay(ctx context.Context, id int64) (string, error)
	GetUserWithRecentOrders(ctx context.Context, id int64) (*UserWithOrders, error)
}
```

Each method maps to a specific, optimized SQL query that fetches only what’s needed. sqlc naturally encourages this approach since you write specific SQL for each generated method.

The Verdict: Context Matters

Many “dark side” arguments target heavy, generic ORM-backed repositories or stem from poor implementation practices. Go’s philosophy of explicit interfaces, combined with tools like sqlc, significantly mitigates these concerns.

The key is specific, purpose-built repository methods backed by explicit, optimized SQL. That is exactly what we’ll achieve in Part 3.

That’s how you keep your Go API clean, performant, and testable.

The Path Forward: Setting Up for Success

We’ve built a working repository, seen its benefits in action, and addressed the critics. Our manual pgxUserRepository demonstrates the architectural value of the pattern while highlighting the boilerplate burden.

This positions us perfectly for Part 3, where sqlc will eliminate the grunt work while preserving all the architectural benefits we’ve gained.

What’s Next: The sqlc Revolution

In Part 3: sqlc to the Rescue! Building Type-Safe & Efficient Data Access in Go, we’ll witness a transformation:

What We’ll Cover:

- `sqlc` Introduction: Philosophy, installation, and basic workflow
- Dramatic Refactoring: Converting our manual repository to use `sqlc`-generated code
- Advanced Features: Complex queries, transactions, custom types, and edge cases
- Production Patterns: Error handling, testing strategies, and performance optimization
- The Complete Picture: Bringing together repositories, `sqlc`, and Go best practices

The Payoff: By the end of Part 3, you’ll have a data access layer that’s:

- ✅ Type-safe: Compile-time guarantees instead of runtime panics

- ✅ Performant: Hand-tuned SQL with zero ORM overhead

- ✅ Maintainable: Clear separation of concerns with minimal boilerplate

- ✅ Testable: Easy mocking and focused unit tests

- ✅ Scalable: Patterns that grow with your application

Get ready to see how sqlc transforms Go database development from tedious to delightful.

What’s your experience with data access patterns in Go? Have you tried repositories, or do you prefer embedding SQL directly in services? Share your thoughts and challenges in the comments—your insights help the entire Go community learn and grow together.

Series Navigation:

- ← Part 1: The Pursuit for Clean Data Access

- Part 2: You are here

- Part 3: Coming soon…