From Generative to Agentic AI: What It Means for Data Protection and Cybersecurity

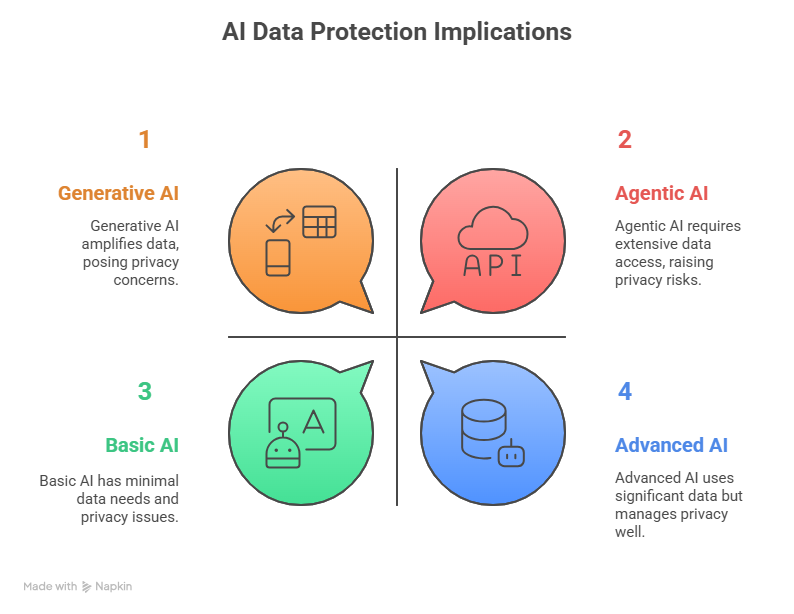

As artificial intelligence continues its rapid evolution, two terms dominate the conversation: generative AI and the emerging concept of agentic AI. While both represent significant advancements, they carry very different implications for businesses, particularly when it comes to data protection and cybersecurity.

This article unpacks what each technology means, how they differ, and what their rise signals for the future of digital trust and security.

What Is Generative AI?

Generative AI refers to systems designed to create new outputs, such as text, images, code, or even music, by identifying and replicating patterns in large datasets. Models like GPT or DALL·E learn linguistic or visual structures and then generate new content in response to user prompts. These systems are widely applied in content creation, customer service chatbots, design prototyping, and coding assistance. Their strengths are efficiency, creativity, and scalability, which allow organizations to produce human-like output at unprecedented speed. At the same time, generative AI comes with challenges: it can hallucinate information, reinforce existing biases, raise intellectual property concerns, and be used to spread misinformation. Ultimately, it amplifies creativity and productivity, but many of its risks trace back to the quality and accuracy of the data it learns from.

What Is Agentic AI?

Agentic AI represents the next step in the evolution of artificial intelligence. Unlike generative AI, which produces outputs in response to prompts, agentic AI is designed to plan, decide, and act with a degree of autonomy. These systems work toward defined goals and can execute tasks independently, reducing the need for constant human intervention. For example, an AI sales agent might not only draft outreach emails but also decide which clients to contact, schedule follow-ups, and refine its strategy based on the responses it receives. Core features of agentic AI include autonomous decision-making, goal-directed behavior, and the capacity for reasoning and self-correction. In essence, agentic AI is less about imitation and more about delegation: it takes on operational responsibilities that were once firmly in human hands.
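A minimal Python sketch makes the plan, decide, act cycle concrete. Everything in it is hypothetical: the `Agent` class, the tool names `draft_email` and `schedule_follow_up`, and the hard-coded plan, which in a real system would come from a language model. The sketch shows the control flow. The agent works through its own plan, retries a failed action as a crude form of self-correction, and hands the task back to a human when it cannot recover.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Toy goal-directed agent: plans steps, runs tools, retries on failure.

    The tools and the fixed plan are hypothetical stand-ins for what a
    language-model planner and real integrations would provide.
    """
    tools: dict[str, Callable[[str], bool]]
    max_retries: int = 2  # extra attempts allowed after the first failure
    log: list[str] = field(default_factory=list)

    def plan(self, goal: str) -> list[tuple[str, str]]:
        # Hypothetical hard-coded plan; a real agent would derive it from the goal.
        return [("draft_email", goal), ("schedule_follow_up", goal)]

    def run(self, goal: str) -> bool:
        for tool_name, arg in self.plan(goal):
            tool = self.tools[tool_name]
            for attempt in range(1, self.max_retries + 2):
                ok = tool(arg)
                self.log.append(f"{tool_name} attempt {attempt}: {'ok' if ok else 'failed'}")
                if ok:
                    break
            else:
                # Retries exhausted: stop acting and hand the task back to a human.
                self.log.append(f"escalated {tool_name} to a human")
                return False
        return True


# Usage: the scheduler fails once, and the agent recovers by retrying.
attempts = {"count": 0}


def flaky_scheduler(goal: str) -> bool:
    attempts["count"] += 1
    return attempts["count"] > 1


agent = Agent(tools={"draft_email": lambda goal: True, "schedule_follow_up": flaky_scheduler})
succeeded = agent.run("re-engage a dormant client")
```

The escalation branch deserves attention. An agent that retried indefinitely, or quietly skipped a failed step, would be producing exactly the kind of unintended action that creates operational risk.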

The Key Differences between Generative and Agentic AI

While generative and agentic AI share the same machine learning foundation, their scope and impact diverge in meaningful ways. Generative AI is primarily designed to create, whether that means drafting a report, generating code snippets, or producing digital artwork. Because its outputs are guided by prompts, it remains largely dependent on human input to initiate and direct its work. Agentic AI, by contrast, is not confined to creation; it extends into decision-making and execution. These systems are goal-driven and can plan and act with enough autonomy to reduce the need for constant human oversight.

This difference also shifts the risk landscape. Generative AI’s challenges typically center on misinformation, bias, or reputational harm caused by inaccurate or inappropriate outputs. Agentic AI, however, raises operational and compliance concerns because it acts independently: errors, unintended actions, or mishandled sensitive data can have immediate and tangible consequences for an organization. In short, generative AI informs, while agentic AI intervenes, and that distinction carries significant implications for both data protection and cybersecurity.

Implications for Data Protection

Both forms of AI are only as strong as the data they consume, but their impact on privacy and compliance differs.

Data Dependency: Generative AI amplifies whatever it is trained on, flaws included. Agentic AI requires real-time access to business and customer data, which makes data accuracy and governance non-negotiable.

Privacy Challenges: Because it acts autonomously, agentic AI may access sensitive data sets (emails, financial records, health data) without explicit human checks. This raises compliance risk under frameworks such as GDPR, HIPAA, or CCPA.

Transparency and Trust: To maintain trust, businesses must build auditability and explainability into AI operations so that every use of data can be traced and justified.
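These three concerns can be handled in code with a gate that every agent data request must pass through. The sketch below assumes a hypothetical two-tier policy (`AUTONOMOUS_SCOPES` and `APPROVAL_REQUIRED`) and hypothetical dataset names. A real deployment would map these to its own data classification. The gate denies anything the policy does not cover and writes an audit record for every request, granted or refused, so each use of data can later be traced and justified.

```python
from datetime import datetime, timezone
from typing import Optional

# Hypothetical policy: data an agent may read on its own versus data
# that needs explicit human approval first.
AUTONOMOUS_SCOPES = {"crm_contacts", "product_catalog"}
APPROVAL_REQUIRED = {"health_records", "financial_records", "employee_email"}


class AuditedDataAccess:
    """Least-privilege gate that records every data-access decision."""

    def __init__(self, agent_id: str) -> None:
        self.agent_id = agent_id
        self.audit_log: list[dict] = []

    def request(self, dataset: str, purpose: str, approved_by: Optional[str] = None) -> bool:
        if dataset in AUTONOMOUS_SCOPES:
            allowed, reason = True, "within autonomous scope"
        elif dataset in APPROVAL_REQUIRED and approved_by:
            allowed, reason = True, f"approved by {approved_by}"
        elif dataset in APPROVAL_REQUIRED:
            allowed, reason = False, "human approval required"
        else:
            allowed, reason = False, "not covered by policy (deny by default)"
        # Log granted and refused requests alike: who asked, for what, why, and when.
        self.audit_log.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "agent": self.agent_id,
            "dataset": dataset,
            "purpose": purpose,
            "allowed": allowed,
            "reason": reason,
        })
        return allowed


gate = AuditedDataAccess(agent_id="sales-agent-01")
results = [
    gate.request("crm_contacts", "select clients for outreach"),
    gate.request("financial_records", "check payment history"),
    gate.request("financial_records", "check payment history", approved_by="compliance officer"),
]
```

Logging refusals as well as grants is deliberate. A burst of denied requests can be an early sign that an agent is misbehaving or has been manipulated.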

Cybersecurity Risks and Opportunities

The rise of agentic AI introduces a paradox for cybersecurity leaders: it is both a new threat vector and a defense mechanism.

Threats:

Malicious actors could exploit agentic AI to automate phishing, fraud, or denial-of-service attacks.

Autonomous execution increases the scale and speed of potential cyberattacks.

Opportunities:

AI agents can serve as always-on defenders, autonomously scanning for vulnerabilities, detecting anomalies, and neutralizing attacks in real time.

Generative AI can assist analysts by drafting threat reports or simulating attack patterns, while agentic AI can execute countermeasures.
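A deliberately simple statistical baseline can illustrate the "always-on defender" role. This Python sketch flags any source whose failed-login count in the latest hour sits more than three standard deviations above a historical baseline (a z-score test), then applies a countermeasure. The data, the threshold, and the `contain` function are all hypothetical. Production tools rely on far richer signals, and a real containment step would call a firewall or identity provider rather than update a Python set.

```python
from statistics import mean, stdev


def flag_anomalies(baseline: list[int], recent: dict[str, int], threshold: float = 3.0) -> list[str]:
    """Return sources whose recent count sits more than `threshold` standard
    deviations above the baseline mean (a simple z-score test)."""
    mu = mean(baseline)
    sigma = stdev(baseline) or 1.0  # guard against a perfectly flat baseline
    return [source for source, count in recent.items() if (count - mu) / sigma > threshold]


def contain(flagged: list[str], blocklist: set[str]) -> None:
    """Hypothetical countermeasure: a real agent might call a firewall or IAM API here."""
    blocklist.update(flagged)


# Failed logins per source per hour: a normal baseline, then the latest hour.
baseline = [4, 6, 5, 7, 5, 6, 4, 5]
recent = {"10.0.0.5": 6, "203.0.113.9": 48}

blocked: set[str] = set()
contain(flag_anomalies(baseline, recent), blocked)
```

In the division of labor described above, generative AI could draft the incident summary for `blocked`, while the agent performs the containment itself.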

The Double-Edged Sword: The same autonomy that makes agentic AI powerful also makes it dangerous if compromised. A hijacked AI agent could cause damage far faster than a human adversary working alone.
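One common way to limit the damage a compromised agent can do is to cap how many actions it may take in a given time window and halt it when the cap is exceeded. The Python sketch below illustrates that idea with a sliding-window limit and a latching breaker. It is not a feature of any particular agent framework, and the class name, limits, and fake clock are assumptions made for the example.

```python
import time
from collections import deque
from typing import Callable


class ActionBudget:
    """Sliding-window cap on agent actions with a breaker that latches.

    If an agent is hijacked, the cap bounds how much it can do before people
    notice; once tripped, the agent stays halted until a human calls reset().
    """

    def __init__(self, max_actions: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_actions = max_actions
        self.window_seconds = window_seconds
        self.clock = clock
        self.recent: deque[float] = deque()
        self.tripped = False

    def allow(self) -> bool:
        if self.tripped:
            return False
        now = self.clock()
        # Forget actions that have aged out of the window.
        while self.recent and now - self.recent[0] >= self.window_seconds:
            self.recent.popleft()
        if len(self.recent) >= self.max_actions:
            self.tripped = True
            return False
        self.recent.append(now)
        return True

    def reset(self) -> None:
        """Called by a human operator after investigating the trip."""
        self.tripped = False
        self.recent.clear()


# Usage with a fake clock so the behaviour is deterministic.
fake_now = [0.0]
budget = ActionBudget(max_actions=3, window_seconds=60, clock=lambda: fake_now[0])
burst = [budget.allow() for _ in range(4)]
```

The breaker latches instead of recovering automatically when the window slides. A burst that exceeds the budget is treated as a signal for human review, not as noise to wait out.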

What’s Next for Cybersecurity in the Age of Agentic AI?

The next wave of cybersecurity will be shaped by how organizations choose to govern AI autonomy. Three priorities stand out as critical for balancing innovation with safety.

1. Stronger Governance Frameworks: Clear accountability for AI actions is essential. Organizations must define who is responsible for outcomes and establish protocols that keep human oversight part of the process.

2. AI-on-AI Defense Strategies: As adversaries increasingly weaponize AI, defensive AI agents will be needed to detect, counter, and neutralize threats in real time. Building resilient systems means assuming that attackers will use autonomous tools too.

3. Human-in-the-Loop Models: Despite advances in autonomy, human judgment cannot be removed from high-stakes decisions. Retaining human authority in areas such as privacy, finance, and safety helps keep AI actions aligned with ethical and regulatory standards.
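The governance and human-in-the-loop priorities can be combined in a small approval gate. In this hypothetical Python sketch, every proposed action carries a named `owner` for accountability. Actions in the high-stakes domains named above (privacy, finance, safety) run only after a human approves them. The domain list, the `ProposedAction` type, and the `ask_human` callback are assumptions; a real `ask_human` callback might open a ticket or send an approval request.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# High-stakes domains from the text; treating them as a fixed set is an assumption.
HIGH_STAKES_DOMAINS = {"privacy", "finance", "safety"}


@dataclass
class ProposedAction:
    description: str
    domain: str
    owner: str  # the named person accountable for this outcome


def needs_human_review(action: ProposedAction) -> bool:
    return action.domain in HIGH_STAKES_DOMAINS


def execute_with_oversight(action: ProposedAction,
                           run: Callable[[], str],
                           ask_human: Callable[[ProposedAction], bool]) -> Optional[str]:
    """Run low-stakes actions directly; high-stakes ones need a human's approval."""
    if needs_human_review(action) and not ask_human(action):
        return None  # vetoed: the agent does not act
    return run()


refund = ProposedAction("Issue a refund to a client", domain="finance", owner="finance operations lead")
reminder = ProposedAction("Send a meeting reminder", domain="scheduling", owner="sales team lead")

declined = execute_with_oversight(refund, run=lambda: "refund issued", ask_human=lambda a: False)
routine = execute_with_oversight(reminder, run=lambda: "reminder sent", ask_human=lambda a: False)
```

The agent does not judge at runtime whether an action is high-stakes. That classification is a fixed policy that people set and own, which keeps accountability with a named human.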

Conclusion

Generative AI changed the way businesses create. Agentic AI is poised to change the way businesses operate. But with greater autonomy comes greater responsibility: data protection and cybersecurity cannot remain afterthoughts.

Organizations that embed governance, transparency, and resilience into their AI strategies will not only mitigate risks but also build the trust needed to unlock AI’s full potential.

The post From Generative to Agentic AI: What It Means for Data Protection and Cybersecurity appeared first on Datafloq.